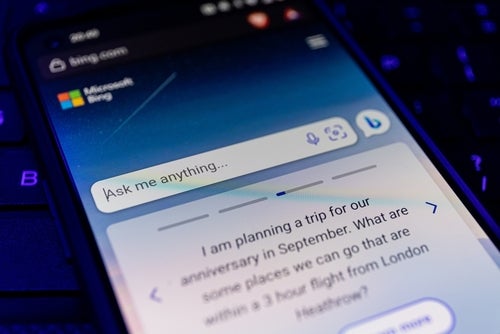

Microsoft has debuted phi-3-mini, a compact large language model (LLM) in its new Phi-3 family. In a paper published on arXiv, the software giant claimed that phi-3-mini, the successor to its earlier compact model Phi-2, is small enough to be installed on a phone while performing on par with much larger LLMs. The paper also announced two larger models in the Phi-3 family, phi-3-small and phi-3-medium. The company did not reveal when any versions of Phi-3 would be released to the wider public.

“We introduce phi-3-mini, a 3.8 billion parameter language model trained on 3.3 trillion tokens, whose overall performance, as measured by both academic benchmarks and internal testing, rivals that of models such as Mixtral 8x7B and GPT-3.5,” said Microsoft. “The innovation lies entirely in our dataset for training, a scaled-up version of the one used for phi-2, composed of heavily filtered web data and synthetic data. The model is also further aligned for robustness, safety, and chat format.”

phi-3-mini is small enough to run on a smartphone

phi-3-mini was trained using a methodology built on heavily filtered data scraped from the open internet, an approach Microsoft says allows the model to “deviate from the standard scaling laws” that typically tie an LLM's capabilities to its size and the volume of its training data. The model performed well on subsequent open benchmarks, added Microsoft, including the GSM8K test of grade-school arithmetic reasoning and OpenBookQA, a question-answering dataset designed to assess understanding of elementary science facts.

How well phi-3-mini performs when interacting with the wider public remains to be seen. Microsoft also acknowledged existing weaknesses in the model, including its restriction to the English language and familiar challenges with hallucinations. Most of these derive from its small size. “The model simply does not have the capacity to store too much ‘factual knowledge’,” said Microsoft, and as a result it benchmarks poorly on the TriviaQA reading comprehension dataset. The company added, however, that this weakness could be resolved in future updates that pair phi-3-mini with a search engine.

Huge potential market for compact LLMs

phi-3-mini joins a growing market for compact LLMs, which not only give users access to complex AI systems on their local devices but are also easier for technology companies to build and train. Examples of small LLMs include EleutherAI's open-source GPT-Neo, which has 2.7bn parameters, Google's Gemma 2B and Huawei's TinyBERT.

Earlier this year, The Information broke the news that Microsoft had created a new team dedicated to building compact LLMs. Victor Botev, founder and CTO of Iris.ai, welcomes this commitment. “Microsoft is wisely looking beyond the ‘bigger is better’ mindset,” says Botev. “Models like Phi-3 clearly demonstrate that with the right data and training approach, advanced AI capabilities need not require building ever-larger models, a deciding factor for businesses where cost-to-quality ratio is critical.”

In time, Botev anticipates greater specialisation of these compact LLMs as businesses fit them to specific use cases. “To truly unlock the potential of models like Phi-3, the user experience and interfaces must also be specialised for particular domains,” he argues. “Rather than expecting a single generalist model to excel at all tasks, purpose-built interfaces and workflows allow for more targeted implementations. With applications across the sciences, business and more in mind, front-end specialisation is key to realising the power of these nimble yet advanced models.”