Apple announced a batch of accessibility features at WWDC 2021 that cover a wide variety of needs, among them a few for people who can’t touch or speak to their devices in the ordinary way. With Assistive Touch, Sound Control, and other improvements, these folks have new options for interacting with an iPhone or Apple Watch.

We covered Assistive Touch when it was first announced, but recently got a few more details. This feature lets anyone with an Apple Watch operate it with one hand by means of a variety of gestures. It came about when Apple heard from the community of people with limb differences — whether they’re missing an arm, or unable to use it reliably, or anything else — that as much as they liked the Apple Watch, they were tired of answering calls with their noses.

The research team cooked up a way to reliably detect the gestures of pinching one finger to the thumb, or clenching the hand into a fist, based on how doing them causes the watch to move — it’s not detecting nervous system signals or anything. These gestures, as well as double versions of them, can be set to a variety of quick actions. Among them is opening the “motion cursor,” a little dot that mimics the movements of the user’s wrist.

Considering how many people don’t have the use of a hand, this could be a really helpful way to get basic messaging, calling, and health-tracking tasks done without needing to resort to voice control.

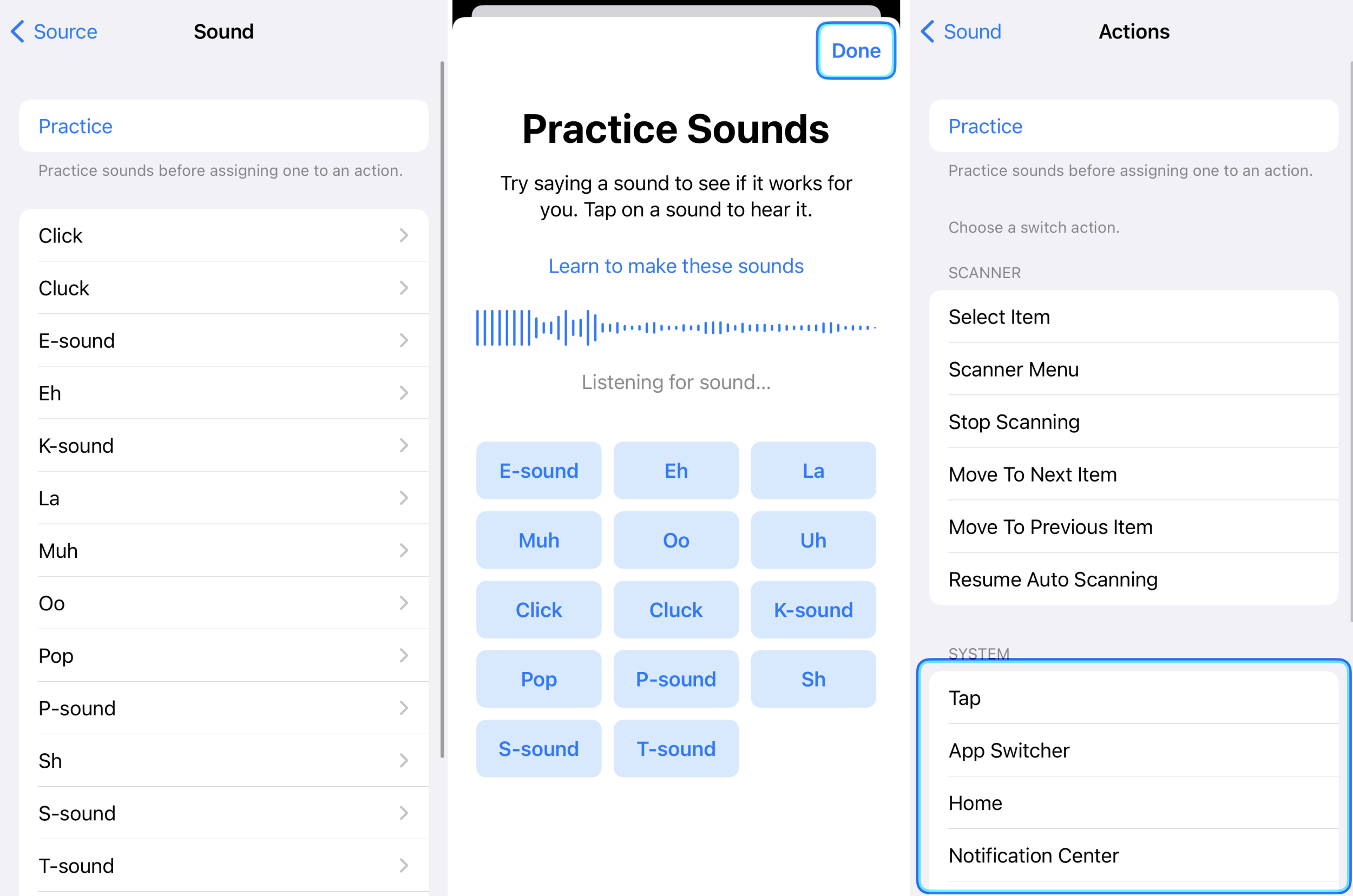

Speaking of voice, that’s also something not everyone has at their disposal. Many of those who can’t speak fluently, however, can make a bunch of basic sounds, which can carry meaning for those who have learned to interpret them, though not so much for Siri. But a new accessibility option called “Sound Control” lets these sounds be used as voice commands. You access it through Switch Control, rather than through the audio or voice settings, and add an audio switch.

The setup menu lets the user choose from a variety of possible sounds: click, cluck, e, eh, k, la, muh, oo, pop, sh, and more. Picking one brings up a quick training process to let the user make sure the system understands the sound correctly, and then it can be set to any of a wide selection of actions, from launching apps to asking commonly spoken questions or invoking other tools.

For those who prefer to interact with their Apple devices through a switch system, the company has a big surprise: Game controllers, once usable only for gaming, now work for general purposes as well. Specifically noted is the amazing Xbox Adaptive Controller, a hub and group of buttons, switches, and other accessories that improves the accessibility of console games. This powerful tool is used by many, and no doubt they will appreciate not having to switch control methods entirely when they’re done with Fortnite and want to listen to a podcast.

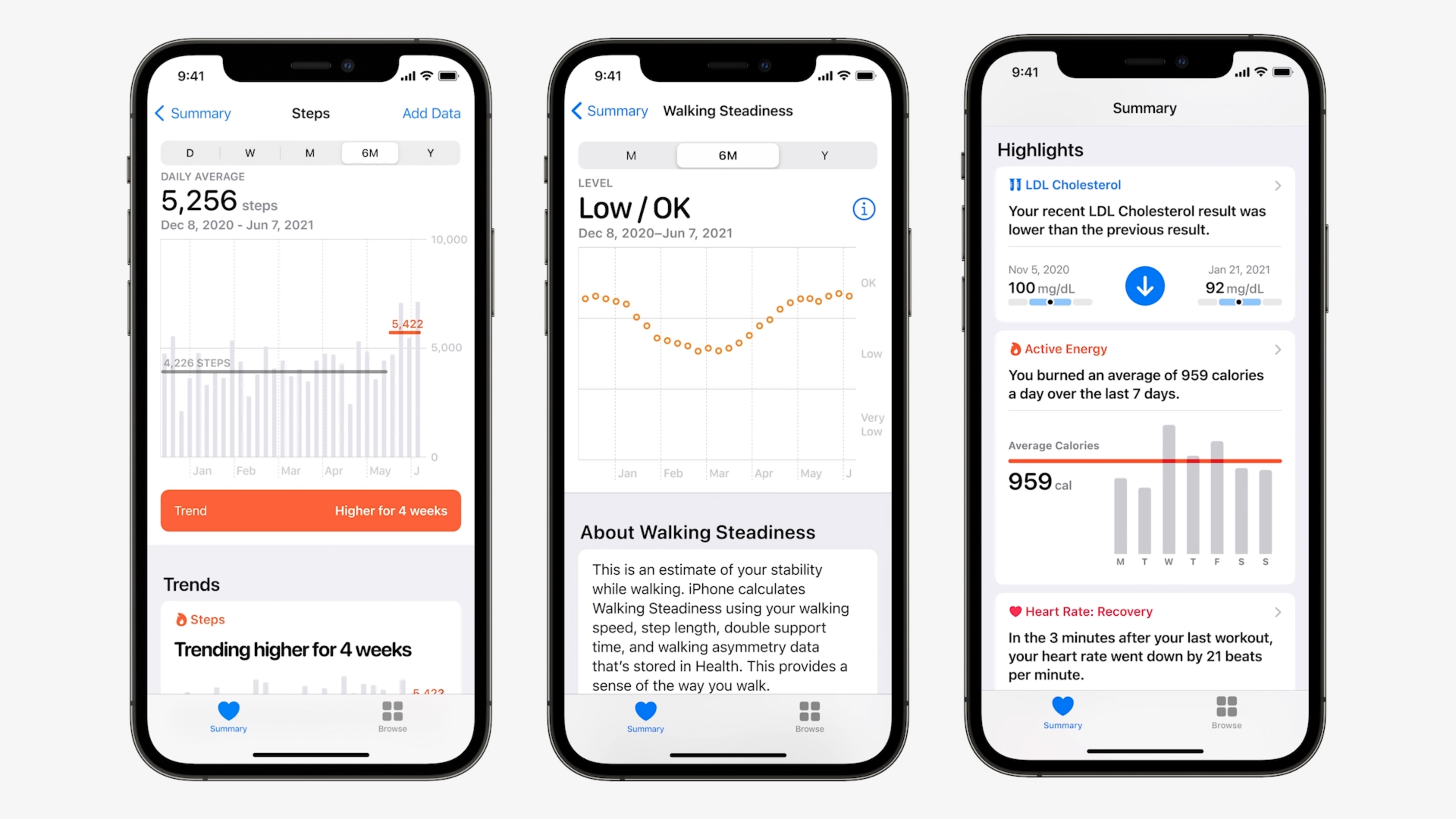

One more interesting capability in iOS that sits at the edge of accessibility is Walking Steadiness. This feature, available to anyone with an iPhone, tracks (as you might guess) the steadiness of the user’s walk. This metric, tracked throughout a day or week, can potentially give real insight into how and when a person’s locomotion is better or worse. It’s based on a bunch of data collected in the Apple Heart and Movement Study, including actual falls and the unsteady movement that led to them.

If the user is someone who recently was fitted for a prosthesis, or had foot surgery, or suffers from vertigo, knowing when and why they are at risk of falling can be very important. They may not realize it, but perhaps their movements are less steady towards the end of the day, or after climbing a flight of steps, or after waiting in line for a long time. It could also show steady improvements as they get used to an artificial limb or as chronic pain declines.

Exactly how this data may be used by an actual physical therapist or doctor is an open question, but importantly it’s something that can easily be tracked and understood by the users themselves.

Among Apple’s other assistive features are new languages for Voice Control, improved headphone acoustic accommodation, support for bidirectional hearing aids, and of course the addition of cochlear implants and oxygen tubes for Memoji. As an Apple representative put it, they don’t want to embrace differences just in features, but on the personalization and fun side as well.